25+ years

75 countries

1,400+ organizations and companies

Our Clients

Success Stories

Our Innovation Services

Embedding a Culture of Innovation in the Organization

When you say you want your company to be more innovative, we interpret this as…

Training Programs

Whether you want to acquire innovation and creative-thinking skills for yourself or build innovation capabilities in your team or organization, SIT has training programs that will suit you exactly…

Developing New Services and Products

Invent innovative, groundbreaking products and services…

Online Innovation Courses

Learn innovation anytime, anywhere…

Problem Solving

Generate innovative solutions to any problem, even the hardest and most complex…

Productivity

Maintain a competitive edge through greater productivity…

The Method

The significant contribution of Systematic Inventive Thinking to the world of innovation is a methodology that helps people break their habitual thinking patterns in order to generate innovative ideas on demand and put them into practice. Over 22 years of development and application, the methodology has been expanded to address all the strategic aspects of organizational innovation: from acquiring skills, through creating a culture and practice of innovation, to developing new business models.

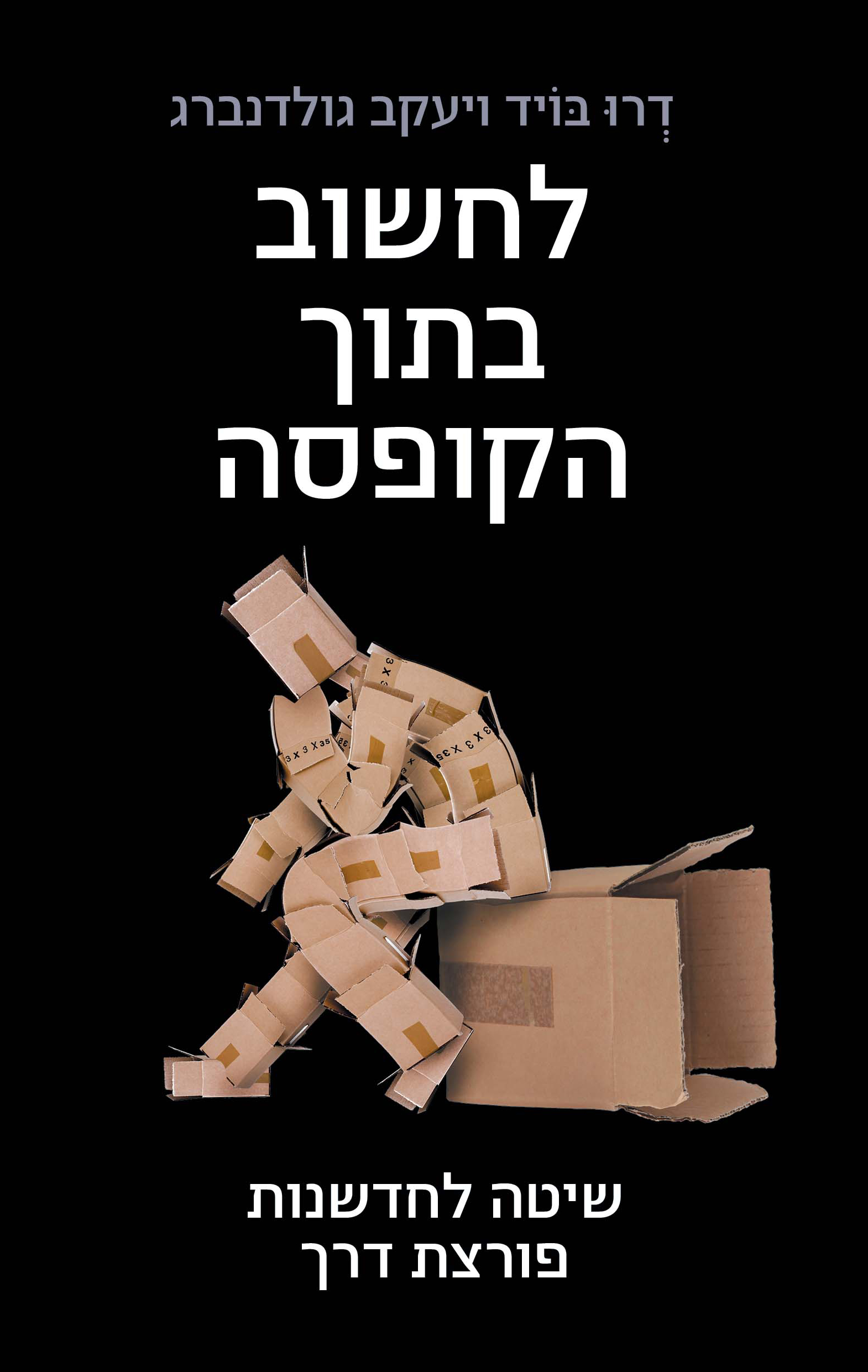

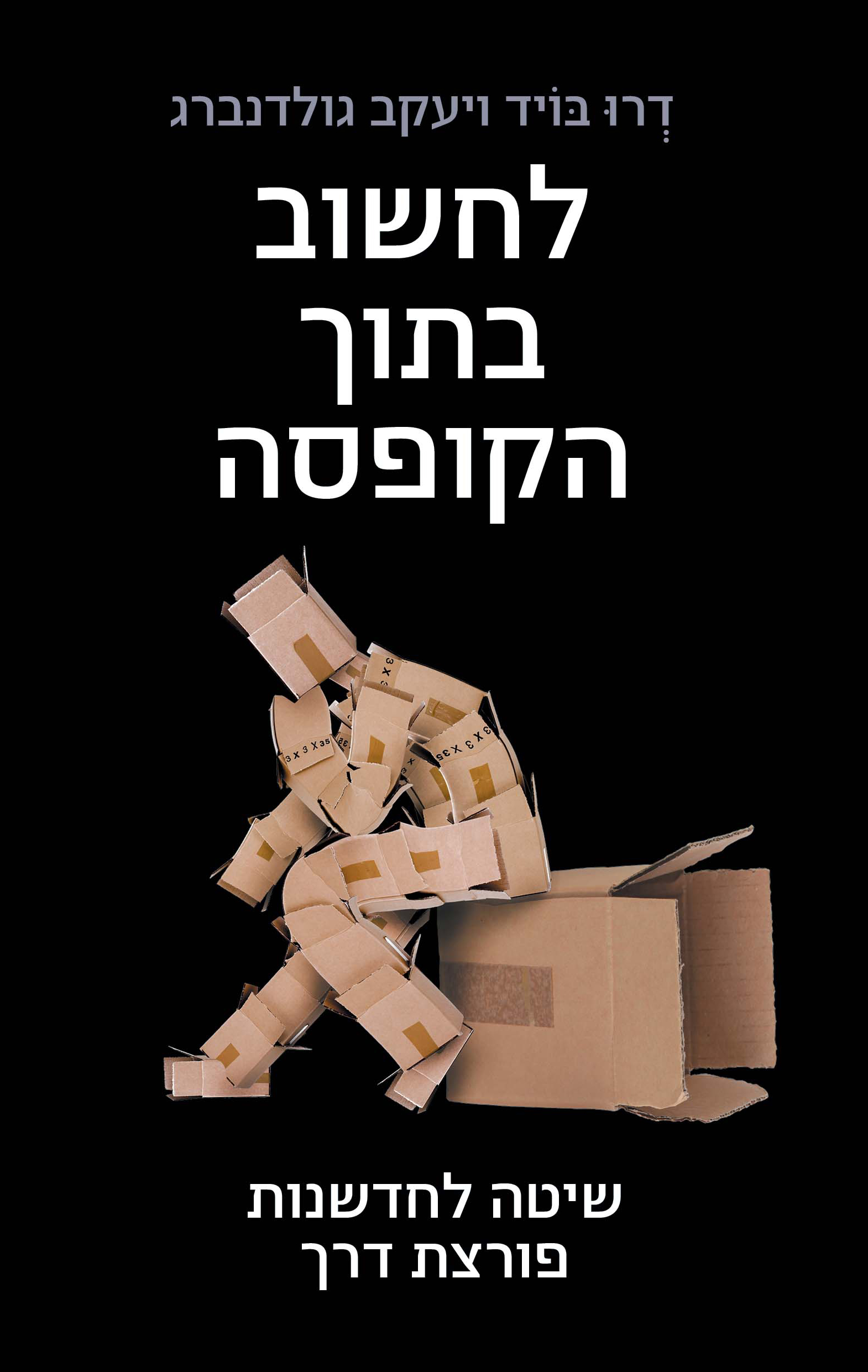

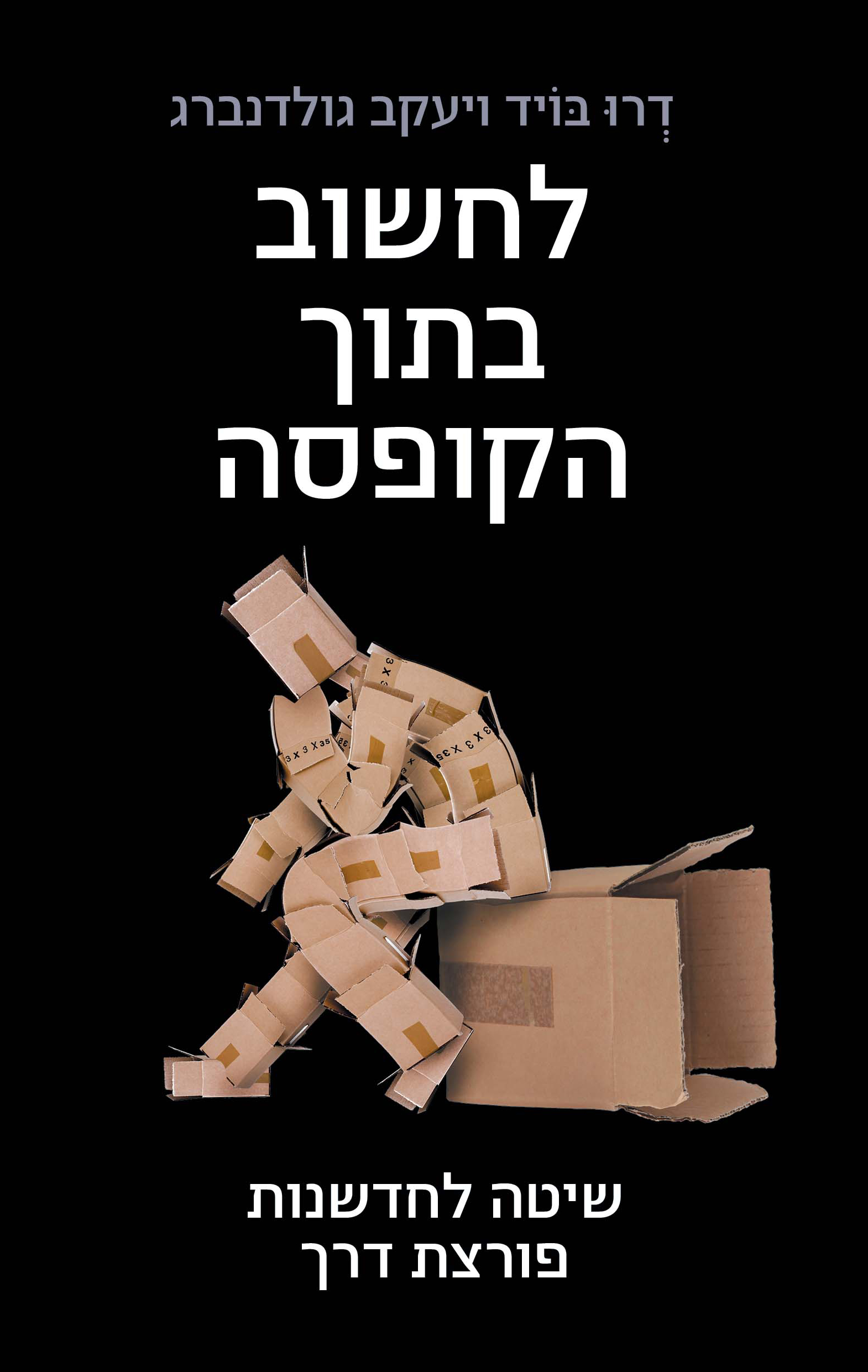

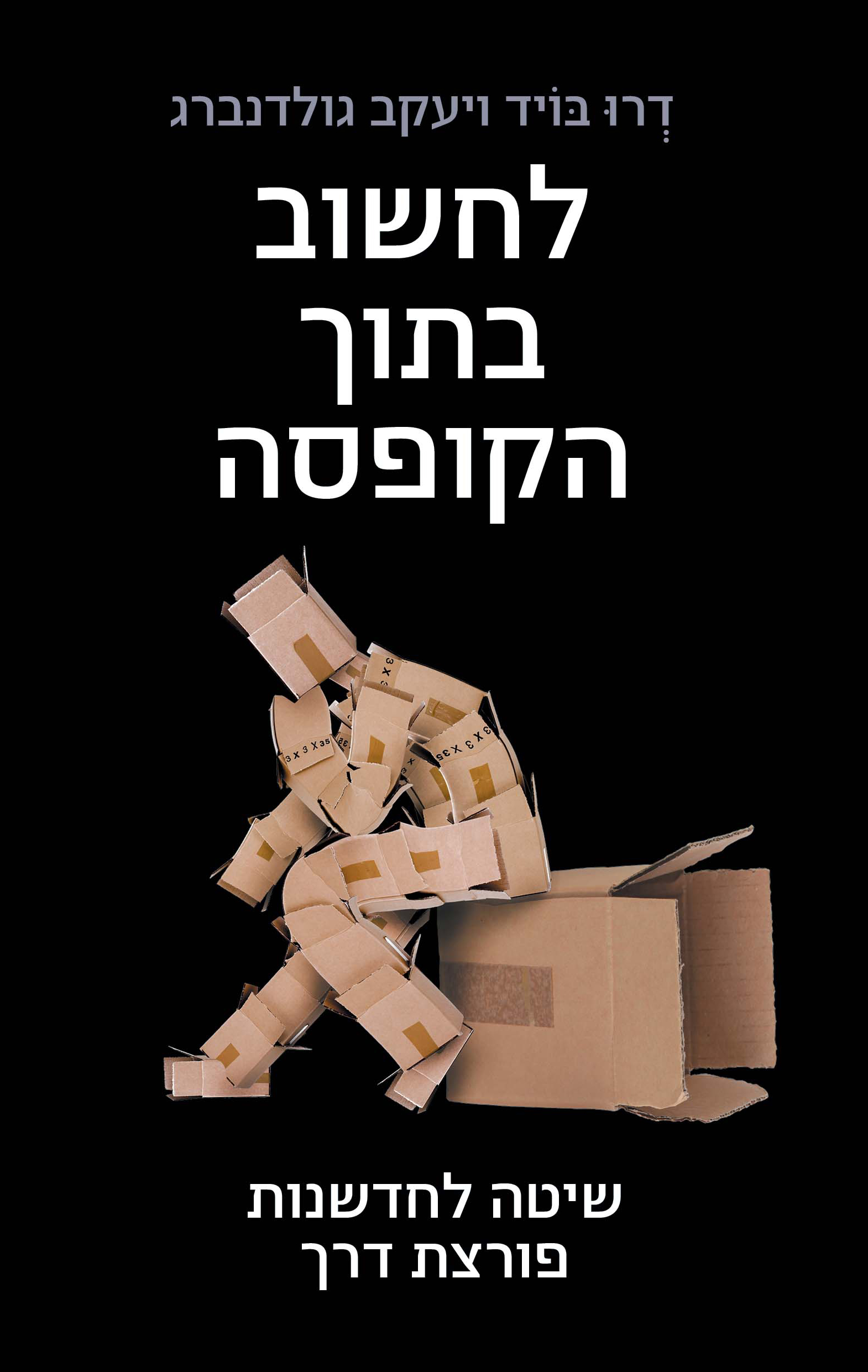

Thinking Inside the Box

Want a truly creative organization? If so, think inside the box. According to the traditional approach, creativity is unstructured and not subject to rules or patterns, and to be truly original and innovative you must think outside the box.
